Random forest regression

Random forest regression fits a model that uses an ensemble of decision trees to estimate a continuous variable.

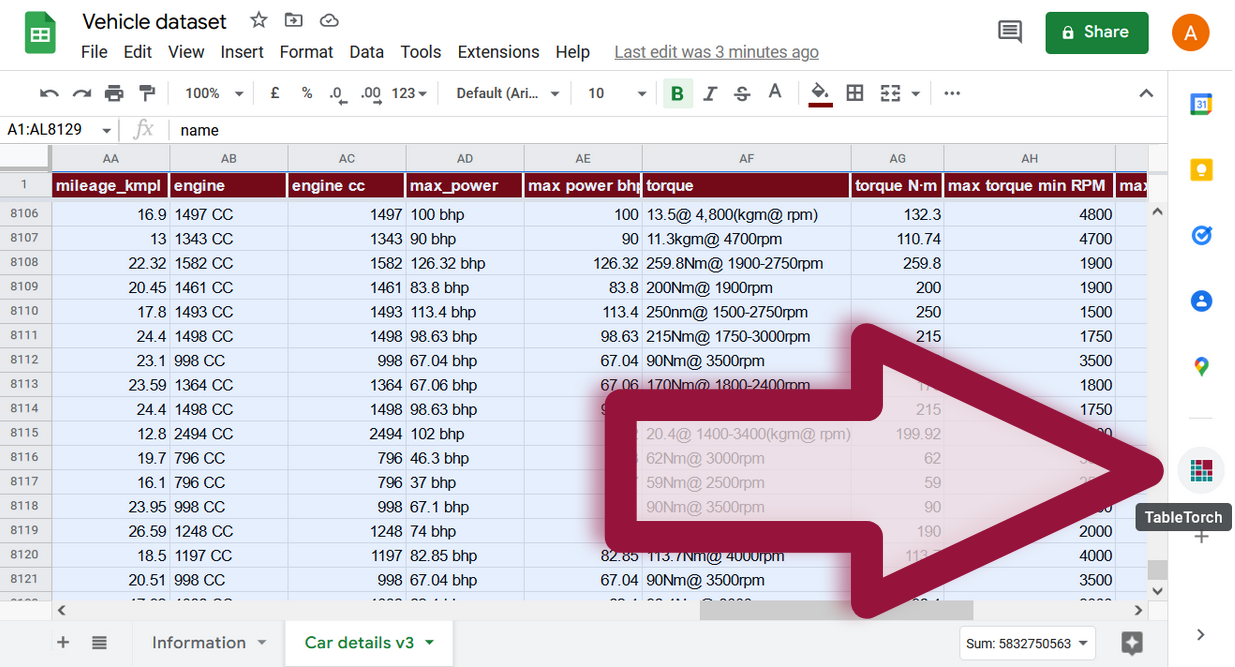

The video below shows how to use TableTorch to apply the random forest regression algorithm to fit a model of a used car price right inside Google Sheets.

Watch on YouTube: Random forest regression in Google Sheets with TableTorch 5:56

TableTorch supports multiple options for random forest models:

- number of decision trees;

- single tree’s maximum depth;

- maximum features limit per tree;

- selecting features with replacement (i.e. using the same feature multiple times in the same tree).

For regression tasks, TableTorch supports:

- Inserting a prediction column.

- Adding a learning summary sheet, containing:

  - learning options;

  - overall model quality metrics:

    - root mean squared error;

    - mean absolute error;

    - mean absolute percentage error;

    - median absolute error;

    - median absolute percentage error;

    - R²;

    - fraction of variance unexplained;

  - first 50 trees overview, explaining if-else conditions inside the trees.
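For readers who want to check the summary sheet's quality metrics by hand, here is a minimal sketch of the metrics listed above using their standard textbook definitions. This page does not state the exact formulas TableTorch uses, so the function name `regression_metrics`, the dictionary keys, and the choice to express percentage errors as fractions are all assumptions made for illustration.

```python
import math
import statistics


def regression_metrics(actual, predicted):
    """Compute common regression metrics for two equal-length lists of numbers."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and equally long")
    errors = [p - a for a, p in zip(actual, predicted)]
    abs_errors = [abs(e) for e in errors]
    # Percentage errors are relative to the actual value (assumes no actual value is 0).
    abs_pct_errors = [abs(e) / abs(a) for e, a in zip(errors, actual)]
    mean_actual = statistics.fmean(actual)
    ss_res = sum(e * e for e in errors)                   # residual sum of squares
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)  # total sum of squares
    fvu = ss_res / ss_tot                                 # fraction of variance unexplained
    return {
        "rmse": math.sqrt(ss_res / len(actual)),
        "mae": statistics.fmean(abs_errors),
        "mape": statistics.fmean(abs_pct_errors),
        "median_ae": statistics.median(abs_errors),
        "median_ape": statistics.median(abs_pct_errors),
        "r2": 1 - fvu,
        "fvu": fvu,
    }


# Made-up prices, for illustration only.
metrics = regression_metrics([100, 200, 300, 400], [110, 190, 300, 420])
for name, value in metrics.items():
    print(name, round(value, 4))
```

Under these definitions, R² and the fraction of variance unexplained always sum to 1, so either one can be derived from the other.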

Prediction columns contain formulas that are ready to be used on new data.
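To illustrate how the if-else conditions of a tree can be written as a spreadsheet formula, the sketch below turns a small hand-written tree into a nested `IF` expression and averages several trees with `AVERAGE`. It is only an illustration: the formulas TableTorch actually generates are not shown on this page and may look quite different. The tree layout (`cell`, `threshold`, `left`, `right`), the cell references, and all numbers are hypothetical.

```python
def tree_to_formula(node):
    """Render a tree as a nested IF expression; a leaf is a plain number."""
    if not isinstance(node, dict):
        return str(node)
    return "IF({}<={},{},{})".format(
        node["cell"],
        node["threshold"],
        tree_to_formula(node["left"]),
        tree_to_formula(node["right"]),
    )


def forest_to_formula(trees):
    """Average the per-tree predictions, as a regression forest does."""
    return "=AVERAGE(" + ",".join(tree_to_formula(t) for t in trees) + ")"


# Hypothetical trees: B2 holds mileage, C2 holds the year of manufacture.
tree_a = {
    "cell": "B2",
    "threshold": 50000,
    "left": 12000,
    "right": {"cell": "C2", "threshold": 2015, "left": 6000, "right": 9000},
}
tree_b = {"cell": "C2", "threshold": 2012, "left": 5000, "right": 11000}

print(forest_to_formula([tree_a, tree_b]))
```

Because such a formula refers only to cells in its own row, copying it down to new rows yields predictions for new data without refitting the model.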

Start TableTorch

- Install TableTorch to Google Sheets via Google Workspace Marketplace. More details on initial setup.

- Click on the TableTorch icon on the right-side panel of Google Sheets.

Random forest options

Random forest is an ensemble model that fits multiple decision trees. Each tree is trained on a limited number of features. Varying the learning options described below can help produce a more precise and resilient model.
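The idea of an ensemble of trees, each trained on a limited set of features, can be sketched in plain Python. This is a teaching sketch and not TableTorch's implementation: all function and parameter names are made up for illustration, and resampling rows with replacement for each tree is an assumption borrowed from the classic random forest algorithm, since this page does not say how rows are sampled.

```python
import random
import statistics


def sse(values):
    """Sum of squared deviations from the mean."""
    mean = statistics.fmean(values)
    return sum((v - mean) ** 2 for v in values)


def build_tree(rows, targets, features, max_depth, depth=0):
    """Grow a regression tree; a leaf is the mean target of its rows."""
    if depth >= max_depth or len(set(targets)) <= 1:
        return statistics.fmean(targets)
    best = None  # (total SSE, feature index, threshold)
    for f in set(features):
        # Skip the largest value so both sides of a split are non-empty.
        for threshold in sorted({row[f] for row in rows})[:-1]:
            left = [t for row, t in zip(rows, targets) if row[f] <= threshold]
            right = [t for row, t in zip(rows, targets) if row[f] > threshold]
            total = sse(left) + sse(right)
            if best is None or total < best[0]:
                best = (total, f, threshold)
    if best is None:  # no allowed feature can separate the rows
        return statistics.fmean(targets)
    _, f, threshold = best
    left = [(r, t) for r, t in zip(rows, targets) if r[f] <= threshold]
    right = [(r, t) for r, t in zip(rows, targets) if r[f] > threshold]
    return {
        "feature": f,
        "threshold": threshold,
        "left": build_tree([r for r, _ in left], [t for _, t in left],
                           features, max_depth, depth + 1),
        "right": build_tree([r for r, _ in right], [t for _, t in right],
                            features, max_depth, depth + 1),
    }


def predict_tree(node, row):
    """Follow the if-else conditions down to a leaf value."""
    while isinstance(node, dict):
        go_left = row[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node


def fit_forest(rows, targets, n_trees, max_depth, max_features,
               with_replacement, seed=0):
    """Fit n_trees trees, each on its own feature subset and row sample."""
    rng = random.Random(seed)
    n_features = len(rows[0])
    forest = []
    for _ in range(n_trees):
        if with_replacement:
            features = rng.choices(range(n_features), k=max_features)
        else:
            features = rng.sample(range(n_features), min(max_features, n_features))
        # Assumption: rows are resampled with replacement, as in classic bagging.
        idx = [rng.randrange(len(rows)) for _ in rows]
        forest.append(build_tree([rows[i] for i in idx],
                                 [targets[i] for i in idx], features, max_depth))
    return forest


def predict_forest(forest, row):
    """A regression forest predicts the average of its trees' predictions."""
    return statistics.fmean([predict_tree(tree, row) for tree in forest])


# Made-up data: two features per row, one numeric target.
rows = [[1, 5], [2, 3], [3, 8], [4, 1], [5, 7], [6, 2]]
targets = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0]
forest = fit_forest(rows, targets, n_trees=20, max_depth=3,
                    max_features=1, with_replacement=False)
print(predict_forest(forest, [3, 4]))
```

The four arguments `n_trees`, `max_depth`, `max_features`, and `with_replacement` stand in for the four learning options described on this page.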

Number of decision trees

Up to a certain limit, the greater this number, the lower the variance of predictions: they become more stable and the model is less likely to overfit.
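The "up to a certain limit" effect can be seen in a small simulation. It is a simplified model, not a measurement of TableTorch: each simulated tree's error is assumed to be the sum of an error shared by all trees and an independent error of its own, and the standard deviations `shared_sd` and `own_sd` are arbitrary illustrative values. Averaging more trees shrinks only the independent part, so the variance levels off at the shared part.

```python
import random
import statistics


def ensemble_variance(n_trees, trials=2000, shared_sd=0.5, own_sd=1.0, seed=0):
    """Estimate the variance of the averaged prediction error of n_trees trees."""
    rng = random.Random(seed)
    averaged_errors = []
    for _ in range(trials):
        shared = rng.gauss(0.0, shared_sd)  # error common to every tree
        tree_errors = [shared + rng.gauss(0.0, own_sd) for _ in range(n_trees)]
        averaged_errors.append(statistics.fmean(tree_errors))
    return statistics.pvariance(averaged_errors)


for n in (1, 10, 100):
    # Theory for this model: shared_sd**2 + own_sd**2 / n
    print(n, round(ensemble_variance(n), 3), round(0.5 ** 2 + 1.0 ** 2 / n, 3))
```

In this model, going from 1 to 10 trees removes most of the avoidable variance, while going from 10 to 100 changes little, which matches the diminishing returns described above.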

Single tree’s maximum depth

The greater this number, the deeper the trees of the random forest may become. This may improve the precision of the model, but it may also result in overfitting, especially if the training dataset is not very large.

Maximum features limit per tree

Sets how many features from the source dataset each tree can use for training. Increasing this number is likely to produce a more precise model but can also result in greater variance and a higher chance of overfitting, especially when there is not enough data.

Selecting features with replacement

Enabling this option allows features to appear more than once in the dataset samples used to train particular trees. This may reduce prediction variance.
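One consequence of drawing features with replacement is that a tree usually ends up with fewer distinct features than the limit allows, because some draws repeat. The sketch below compares the standard expected-value formula with a simulation. The feature counts are arbitrary, and the assumption that each draw is uniform over all features is ours, not something stated on this page.

```python
import random


def expected_distinct(n_features, n_draws):
    """Expected number of distinct features after n_draws uniform draws with replacement."""
    return n_features * (1 - (1 - 1 / n_features) ** n_draws)


def simulated_distinct(n_features, n_draws, trials=10000, seed=0):
    """Average number of distinct features observed over many simulated trees."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        drawn = rng.choices(range(n_features), k=n_draws)  # with replacement
        total += len(set(drawn))
    return total / trials


# With 10 features and a limit of 10, sampling without replacement
# always uses all 10, while sampling with replacement uses fewer on average.
print(len(set(random.Random(0).sample(range(10), 10))))
print(round(expected_distinct(10, 10), 2), round(simulated_distinct(10, 10), 2))
```

Fewer distinct features per tree makes the trees differ more from one another, which is one plausible reason the option can reduce prediction variance.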


Google, Google Sheets, Google Workspace and YouTube are trademarks of Google LLC. Gaujasoft TableTorch is not endorsed by or affiliated with Google in any way.
